Exploring the Challenges of Automated Music Videos with OpenArt

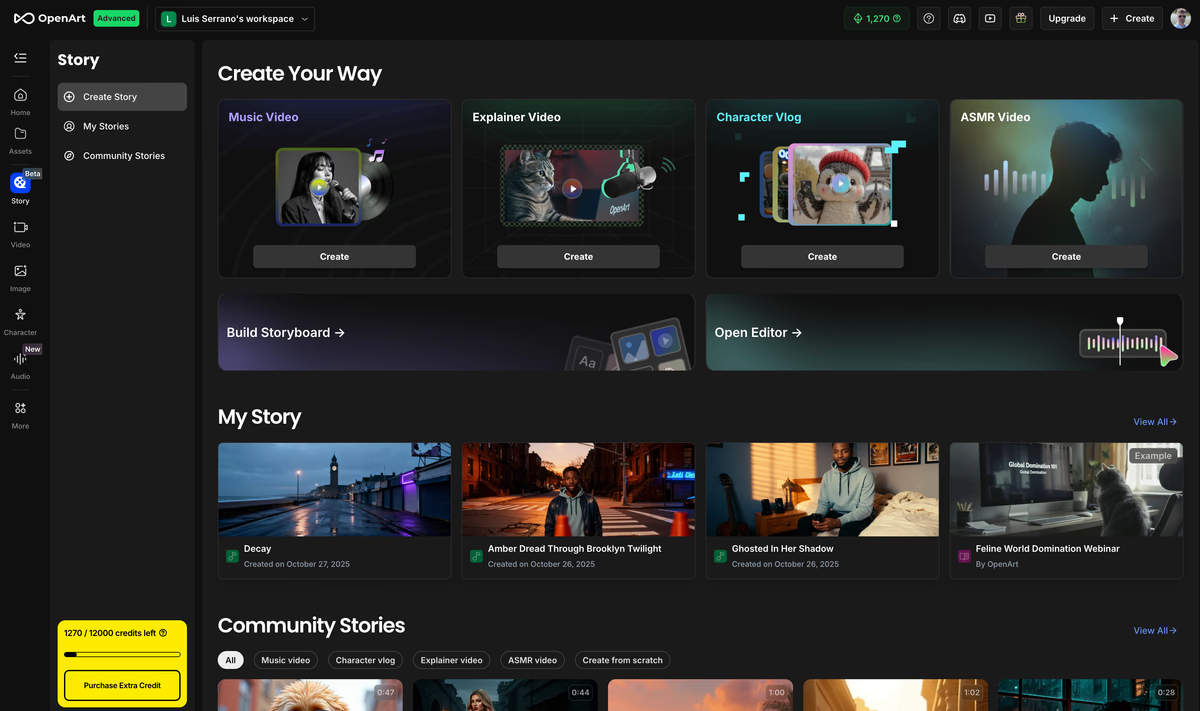

As an avid explorer of AI tools, I recently dove into OpenArt’s platform to create automated music videos, starting with the basic tier (4,000 credits). I soon had to upgrade to the Advanced tier (€29/month) and its 12,000 credits; you’ll understand why in a minute. My initial goal was a theatrical rock/hip-hop fusion video set in New York City, featuring a character (@Jay) laughing and the woman he is singing to. After discovering how many credits that really requires, I shifted to a shorter, lip-sync version of the experiment. After a challenging journey and disappointing results, I decided to try my luck with the “lyrics video” feature and a different song. This post outlines the hurdles I encountered, providing a grounded analysis for creators considering similar projects.

Initial Experiment: Narrative Video with @Jay

The first and most important challenge is that this tool does not let you provide a full storyboard. It lets you type a short storyline and then charges a good amount of credits to prepare the storyboard for you, which is image-based. Generating all those still images (first frames) consumes credits, obviously. It would be far better to focus on the story first, not the images, or at least to let the author write the storyboard so the tool can analyze it and create the images from it. After creating the FIRST storyboard, I didn’t have enough credits left to create a full music video, so I decided to give the platform a chance and upgraded my subscription.

Upgrading from the Essential (€14) to the Advanced plan was seamless, with only the €15 difference charged. The Advanced tier unlocked 8 character slots, premium models like Kling and Veo, and a 12,000-credit pool. I began with a narrative-style video, using a 378-character prompt and Seedream 4.0 for image generation. The initial storyboard cost 750 credits, but it only included @Jay, with the second character (the woman) missing despite the 8-character unlock. Disappointed by the single-character output and the credit burn, I discarded this approach.

I then opted for the lip-sync video option, which required recreating the storyboard, another credit expense. However, this mode limits input to a location and a “vibe” (not even the storyline I was asked for in the narrative-style video). It generates a tweakable storyboard at additional cost. The video model for this kind of video is limited to OpenArt LipSync, not the premium Kling or Veo models, further restricting quality. After investing more credits in this setup, the results were lackluster: awkward poses and really poor lip-sync. I am guessing the lip-sync issues are mostly due to one particular missing feature: you can’t upload, type or amend the lyrics in any way, which seems odd because the whole point is making a music video. You NEED the lyrics; trusting the model to understand sung words is really naive. Or intentional, because fixing lyrics consumes a lot of credits. I abandoned this effort and moved on.

These are the results:

Here’s the song, if you liked it!

Shift to Lyrics Video Feature with a New Song

Seeking to explore further, I switched to a different song to evaluate the “lyrics video” feature, uncovering a new set of challenges.

Challenge 1: Lyric Extraction and Correction

The lyrics video tool attempted to extract lyrics from a Suno song link but offered no option to upload lyrics or an SRT file. It misread words (e.g., “we’re” as “were”) and occasionally dropped parts of sentences, requiring frame-by-frame corrections. Each fix cost 15 credits, with re-animation adding 100 credits per segment. Approximately 30% of the initial frames needed reworking, driving up the credit expenditure significantly. The lyrics were literally in the Suno link, so not reading them was a really bad oversight from the product team. Creators need to be able to provide the lyrics, especially when they upload music files.
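To get a feel for how quickly those corrections add up, here is a rough back-of-the-envelope sketch. The 15-credit fix, the 100-credit re-animation and the 30% rework rate come from my own run; the 40-segment video length is a made-up example, and the function assumes every reworked segment needs exactly one fix plus one re-animation, which may not match how OpenArt actually bills.

```python
# Rough estimate of correction costs for a lyrics video.
FIX_COST = 15          # credits per lyric fix (observed in my run)
REANIMATE_COST = 100   # credits per re-animated segment (observed in my run)


def correction_cost(total_segments: int, rework_rate: float = 0.30) -> int:
    """Estimate credits spent on corrections.

    Assumption: each reworked segment needs one fix and one re-animation.
    """
    reworked = round(total_segments * rework_rate)
    return reworked * (FIX_COST + REANIMATE_COST)


# Hypothetical 40-segment video, 30% of segments reworked.
print(correction_cost(40))
```

Doubling the number of segments doubles the correction bill under these assumptions, so longer songs get expensive fast.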

Challenge 2: Performance and User Experience

As corrections accumulated, the platform’s performance degraded. On my 32 GB Mac Mini M4 (16-core CPU), the interface slowed, with page loads becoming increasingly sluggish. This unoptimized rendering likely strained the hardware, turning a time-saving tool into a labor-intensive process.

Challenge 3: Credit Consumption and Scalability

Taken together, the experiments consumed approximately 10,285 credits, over 85% of the 12,000-credit monthly allocation, leaving just 1,715 credits. This included the base cost for generating the lyrics video, the 750 credits for the narrative storyboard, additional credits for the lip-sync storyboard, and the costs of error corrections. The steep cost curve, especially for complex lyrics, underscores the need for careful planning, particularly with the annual commitment (€84) locking users into these dynamics.
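The budget arithmetic is simple enough to check in a few lines. This is only a sketch using the figures from my account (12,000 credits in the pool, roughly 10,285 spent); the "corrections left" number assumes each correction costs one 15-credit fix plus one 100-credit re-animation, which is my reading of the pricing rather than anything OpenArt documents.

```python
# Quick budget check using the figures from this post.
MONTHLY_CREDITS = 12_000   # Advanced tier pool
CREDITS_USED = 10_285      # approximate total spent across all experiments
CORRECTION_COST = 15 + 100  # assumed: one fix plus one re-animation


def credits_left(pool: int, used: int) -> int:
    """Credits remaining in the monthly pool."""
    return pool - used


def percent_used(pool: int, used: int) -> float:
    """Share of the monthly pool already spent, in percent."""
    return used / pool * 100


def corrections_affordable(left: int, cost: int = CORRECTION_COST) -> int:
    """How many more full corrections fit into the remaining credits."""
    return left // cost


left = credits_left(MONTHLY_CREDITS, CREDITS_USED)
print(left)
print(f"{percent_used(MONTHLY_CREDITS, CREDITS_USED):.1f}%")
print(corrections_affordable(left))
```

In other words, what was left after one lyrics video would cover only a handful of further corrections, nowhere near a second video.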

Challenge 4: Editing and Timeline Disruptions

The issues extended into the editing phase. Some reworked videos failed to refresh, retaining old frames with grammar mistakes in the final montage despite selecting corrected versions. Manual replacement was necessary, but the UX worsened—my browser choked after a few edits, likely due to memory strain. Editing also introduced random, unseen clips, disrupting timing. To address this, I had to switch to the storyboard view, delete unwanted clips, return to the timeline, and adjust clip speeds (as some were too short for their lyric segments), adding further complexity and credit use.

Reflections on the Process

The high-quality Seedream 4.0 images highlight OpenArt’s strength in visual generation, making it a solid choice for static content. However, the video features—narrative and lip-sync modes, plus the lyrics video—expose limitations in character consistency, lyric accuracy, and editing reliability. The need for manual fixes, performance lag, and timeline issues suggest the tool is still evolving, particularly for music video production.

For the sake of comparison, Neural Frames ($19/month) addresses many of these challenges with SRT and lyric uploads, precise lip-sync, smoother performance, and better timeline control. However, its image model falls short of Seedream 4.0’s cinematic quality, presenting a trade-off for video-focused creators.

Conclusion: The Reality of Automated Music Videos

This experience reveals that automated music videos remain an unsolved challenge. The notion of writing a prompt and clicking a button—often critiqued by AI detractors—is far from reality. While OpenArt excels in image generation, the labor-intensive fixes, credit costs, and editing hurdles demonstrate the effort required for quality output. For short, casual “AI slop” clips, automation might work. However, for polished ads, music videos, or short films, the process demands significant work, with no true shortcuts. This manual involvement could be a strength, ensuring human creativity guides the final product rather than relying solely on AI approximations. As tools develop, I encourage creators to treat them as collaborators, not magic solutions, and approach with thorough testing before committing.

And now I’ll upscale the 1080p lyrics video I managed to finish and upload it to YouTube in 4K. Then I will cancel my subscription. Maybe I’ll be back if they fix the issues I have found and optimize for FULL music videos. They should offer ways to reduce costs as well, but getting the lyrics right (by allowing us to edit/upload them) and allowing creators to provide detailed storyboards would help a lot. By the way, the automatic storyboards these tools provide (even Neural Frames) are weird, overly abstract, trippy and, well, nonsensical. So the “autopilot” mode for videos isn’t too useful at the moment.

What do you think?